Track

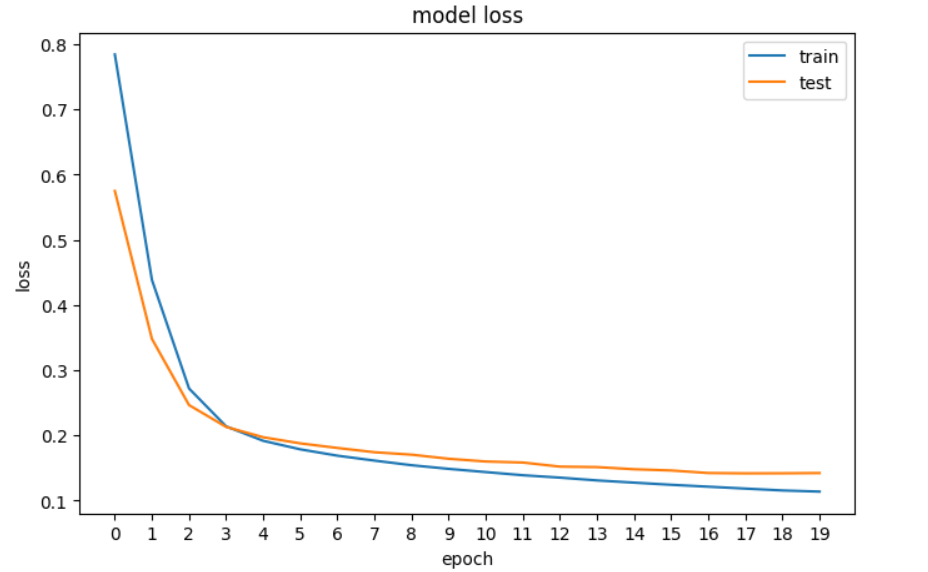

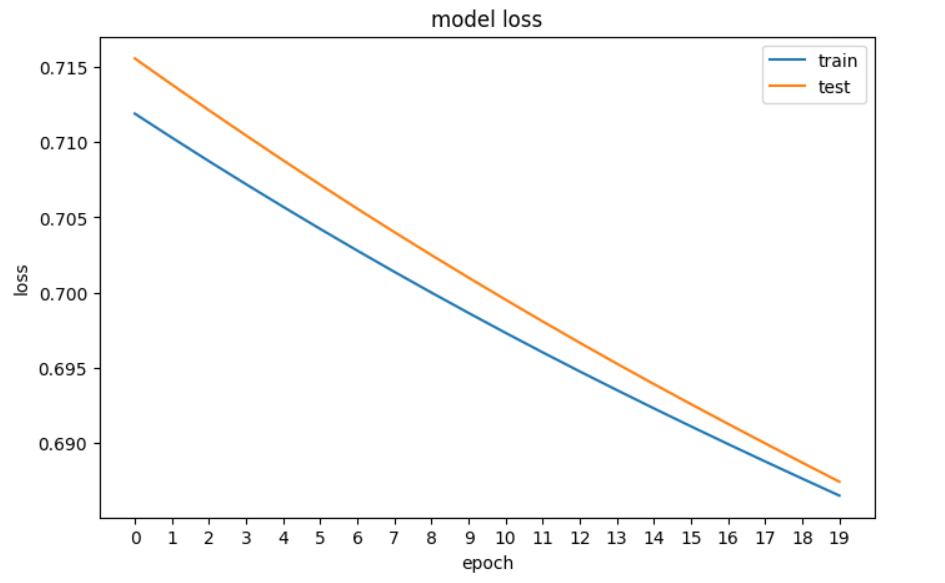

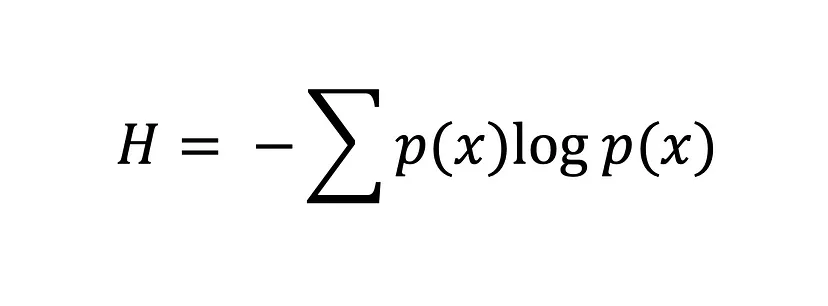

Cross-Entropy Loss Function in Machine Learning: Enhancing Model Accuracy

A guide to the cross-entropy loss function in machine learning: what it measures and how it is used to improve the accuracy of classification models, with TensorFlow and PyTorch examples.

Jan 2024 · 12 min read

![Binary cross entropy formula. [Source: Cross-Entropy Loss Function]](https://images.datacamp.com/image/upload/v1704977071/image5_c852b97c8a.png)
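For readers who cannot see the figure, here is a minimal sketch of binary cross-entropy. It assumes the figure shows the standard definition, BCE = -(1/N) Σ [y·log(p) + (1 − y)·log(1 − p)], where y is the true label (0 or 1) and p is the predicted probability of class 1. The function name `binary_cross_entropy` and the `eps` clipping value are illustrative choices, not something taken from the article.

```python
import math


def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Mean binary cross-entropy over paired labels and probabilities.

    y_true: sequence of 0/1 labels.
    y_pred: sequence of predicted probabilities for class 1.
    eps:    assumed clipping constant that keeps log() away from 0.
    """
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("y_true and y_pred must be non-empty and equal in length")

    total = 0.0
    for y, p in zip(y_true, y_pred):
        # Clip so that log(0) is never evaluated.
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)

    # Negate and average over the N samples.
    return -total / len(y_true)


if __name__ == "__main__":
    labels = [1, 0, 1, 1]
    confident = [0.9, 0.1, 0.8, 0.95]   # mostly correct predictions
    uncertain = [0.5, 0.5, 0.5, 0.5]    # maximally unsure predictions
    print(binary_cross_entropy(labels, confident))
    print(binary_cross_entropy(labels, uncertain))
```

When every prediction is 0.5, the loss equals log(2) regardless of the labels. Confident, correct predictions push it toward 0, while confident, wrong ones make it grow without bound. In practice the built-in losses in TensorFlow or PyTorch would normally be used instead of a hand-written loop.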