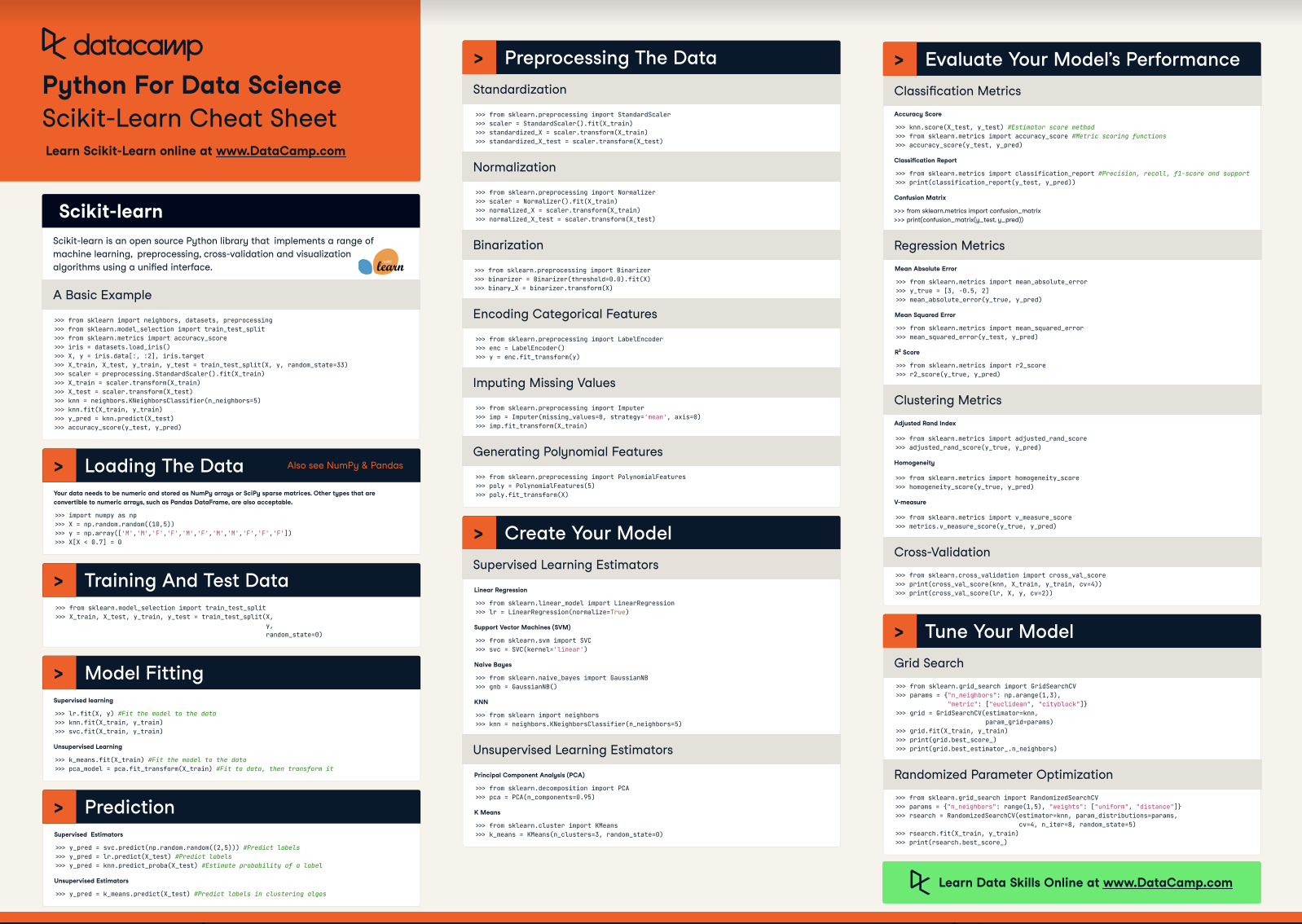

Scikit-Learn Cheat Sheet: Python Machine Learning

A handy scikit-learn cheat sheet to machine learning with Python, including some code examples.

May 2021 · 4 min read

RelatedSee MoreSee More

tutorial

A Comprehensive Tutorial on Optical Character Recognition (OCR) in Python With Pytesseract

Master the fundamentals of optical character recognition in OCR with PyTesseract and OpenCV.

Bex Tuychiev

11 min

tutorial

An Introduction to Vector Databases For Machine Learning: A Hands-On Guide With Examples

Explore vector databases in ML with our guide. Learn to implement vector embeddings and practical applications.

Gary Alway

8 min

tutorial

Encapsulation in Python Object-Oriented Programming: A Comprehensive Guide

Learn the fundamentals of implementing encapsulation in Python object-oriented programming.

Bex Tuychiev

11 min

tutorial

Python KeyError Exceptions and How to Fix Them

Learn key techniques such as exception handling and error prevention to handle the KeyError exception in Python effectively.

Javier Canales Luna

6 min

code-along

Full Stack Data Engineering with Python

In this session, you'll see a full data workflow using some LIGO gravitational wave data (no physics knowledge required). You'll see how to work with HDF5 files, clean and analyze time series data, and visualize the results.

Blenda Guedes

code-along

Getting Started with Machine Learning Using ChatGPT

In this session Francesca Donadoni, a Curriculum Manager at DataCamp, shows you how to make use of ChatGPT to implement a simple machine learning workflow.

Francesca Donadoni